John Carmack muses about using a long fiber line as an L2 cache for streaming AI data: programmer imagines fiber as an alternative to DRAM

Summary

John Carmack explores using a long loop of optical fiber as an L2 cache for streaming AI data. Because AI model weights are read in a fixed, sequential order, data circulating in the fiber could feed a processor directly, making fiber a possible alternative to DRAM for this workload.

Key Insights

What is an L2 cache, and how does Carmack propose using fiber as one?

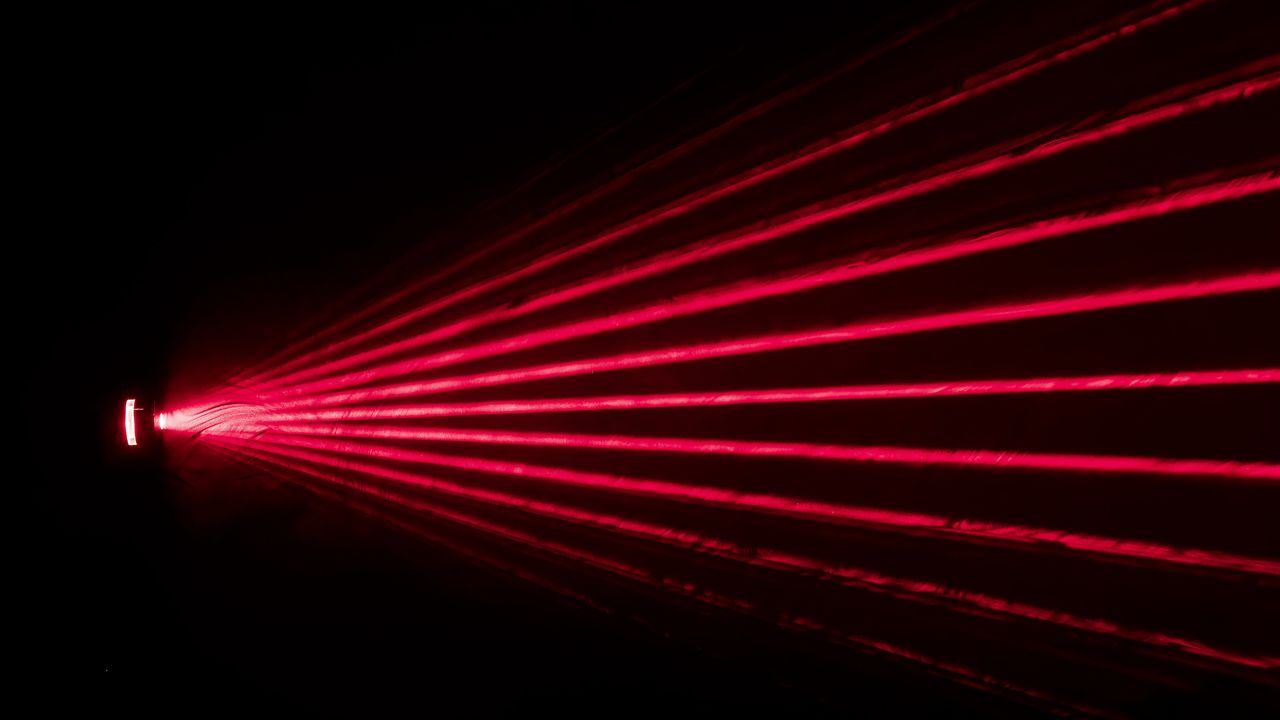

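An L2 cache is a fast memory located close to the processor that holds frequently accessed data to reduce latency. John Carmack proposes using a long fiber optic loop as an alternative to an L2 cache for AI data streaming. Fiber links have reached up to 256 Tb/s over 200 km, and light takes about 1 ms to cross that distance, so roughly 32 GB of data is in flight inside the fiber at any moment. Recirculating that data would let the loop hold 32 GB of AI model weights. Because inference and training read the weights sequentially, each chunk can arrive just as it is needed, so the loop's delay does not stall the processor.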
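The 32 GB figure follows from the bandwidth-delay product: capacity equals bandwidth multiplied by the time light spends in the fiber. The sketch below reproduces that arithmetic. It assumes light travels through silica fiber at roughly 2×10^8 m/s, and the function name `bytes_in_flight` is hypothetical, chosen for illustration.

```python
# Sketch: how much data is "stored" in a fiber loop at any instant.
# Assumption: light in silica fiber travels at roughly 2e8 m/s
# (refractive index of about 1.5); real fibers vary slightly.

SPEED_IN_FIBER_M_PER_S = 2.0e8  # assumed approximate propagation speed


def bytes_in_flight(bandwidth_bits_per_s: float, length_m: float,
                    speed_m_per_s: float = SPEED_IN_FIBER_M_PER_S) -> float:
    """Return bytes resident in the fiber: bandwidth x one-way propagation delay."""
    delay_s = length_m / speed_m_per_s  # time for one bit to traverse the loop
    bits = bandwidth_bits_per_s * delay_s  # bits launched during that time
    return bits / 8  # convert bits to bytes


if __name__ == "__main__":
    # 256 Tb/s over 200 km: a 1 ms delay holds about 32 GB.
    capacity = bytes_in_flight(256e12, 200e3)
    print(f"{capacity / 1e9:.1f} GB in flight")
```

Doubling either the fiber length or the data rate doubles the capacity, which is why the idea depends on very long spans and very high line rates.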

Sources:

[1]

What are the potential benefits and challenges of using fiber optic cable instead of DRAM for AI data caching?

Benefits include massive bandwidth, near-zero waiting when data is read sequentially (each chunk reaches the reader just as it is needed), significant power savings (moving data as light takes far less power than holding it in DRAM), and a growth trajectory for fiber bandwidth that may outpace DRAM's. The challenges are the high cost of 200 km of fiber and the logistics of deploying it, and Carmack himself suggests flash memory as a more practical alternative.
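The "near-zero latency for sequential access" benefit, and its flip side, can be seen by modelling the loop as a delay-line memory. The sketch below rests on simplifying assumptions: the loop holds `n_chunks` equal-sized chunks that pass a single read point one per time slot, and reading a chunk takes one slot. The functions `wait_slots` and `total_wait` are hypothetical helpers written for illustration. Reading chunks in loop order never waits. Random order waits about half a loop on average, which for a 1 ms loop is roughly 0.5 ms per read.

```python
# Sketch: a recirculating fiber loop modelled as a delay-line memory.
# Hypothetical model: n_chunks equal-sized chunks pass one read point,
# one chunk per time slot; reading a chunk consumes that slot.
import random
from typing import Sequence


def wait_slots(head: int, target: int, n_chunks: int) -> int:
    """Slots to wait until chunk `target` reaches the read point,
    given that chunk `head` is at the read point now."""
    return (target - head) % n_chunks


def total_wait(order: Sequence[int], n_chunks: int) -> int:
    """Total slots spent waiting when chunks are read in `order`."""
    head = 0
    waited = 0
    for target in order:
        waited += wait_slots(head, target, n_chunks)
        # After reading `target`, the next chunk in the loop arrives.
        head = (target + 1) % n_chunks
    return waited


if __name__ == "__main__":
    n = 1000
    sequential = list(range(n))
    shuffled = sequential[:]
    random.Random(0).shuffle(shuffled)
    # Sequential reads (like streaming model weights) never wait.
    print("sequential wait per read:", total_wait(sequential, n) / n)
    # Random reads wait roughly half a loop per access on average.
    print("random wait per read:", total_wait(shuffled, n) / n)
```

This is why the idea suits AI weights, which are consumed in a fixed order, but would make a poor general-purpose cache.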

Sources:

[1]