Quantum Computing

Quantum Computing in 2025: Expert Market Analysis & Technical Insights

A comprehensive, data-driven analysis of quantum computing’s current state, technical breakthroughs, and enterprise adoption trends, grounded in hands-on evaluation and industry research.

Expert Market Analysis

Quantum computing has moved from theoretical research into practical experimentation and early-stage enterprise adoption. As of Q2 2025, leading technology companies, including IBM, Google, Microsoft, Rigetti, D-Wave, IonQ, Quantinuum, Intel, and Amazon, are executing ambitious roadmaps toward quantum advantage. IBM is targeting a quantum-centric supercomputer exceeding 4,000 qubits by 2025 and is releasing the Nighthawk processor, a 120-qubit device with high connectivity and advanced error mitigation capabilities[2][5]. Market research from The Quantum Insider and industry analysts indicates that global investment in quantum technologies surpassed $3.2 billion in 2024, with a projected CAGR of 28% through 2028. The focus has shifted from pure research to hybrid quantum-classical applications, particularly in optimization, AI, and scientific simulation[1][2].
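To make the growth figure concrete, the sketch below compounds the quoted numbers forward. It assumes the 28% CAGR applies to the same investment figure, treats 2024 as the base year, and uses $3.2 billion as the starting value even though the text describes it as a floor ("surpassed"). The function name `project_cagr` is illustrative only.

```python
def project_cagr(base_value: float, rate: float, years: int) -> float:
    """Compound base_value forward by `rate` per year for `years` years."""
    return base_value * (1.0 + rate) ** years


BASE_YEAR = 2024
BASE_INVESTMENT_BN = 3.2  # USD billions, the 2024 figure quoted in the text
CAGR = 0.28               # 28% compound annual growth rate, as quoted

# Assumption: growth compounds annually from the 2024 base through 2028.
for year in range(BASE_YEAR, 2029):
    value = project_cagr(BASE_INVESTMENT_BN, CAGR, year - BASE_YEAR)
    print(f"{year}: ${value:.2f}B")
```

Under these assumptions, four years of compounding takes the figure to roughly $8.6 billion by 2028, about 2.7 times the 2024 level.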

Technical Deep Dive

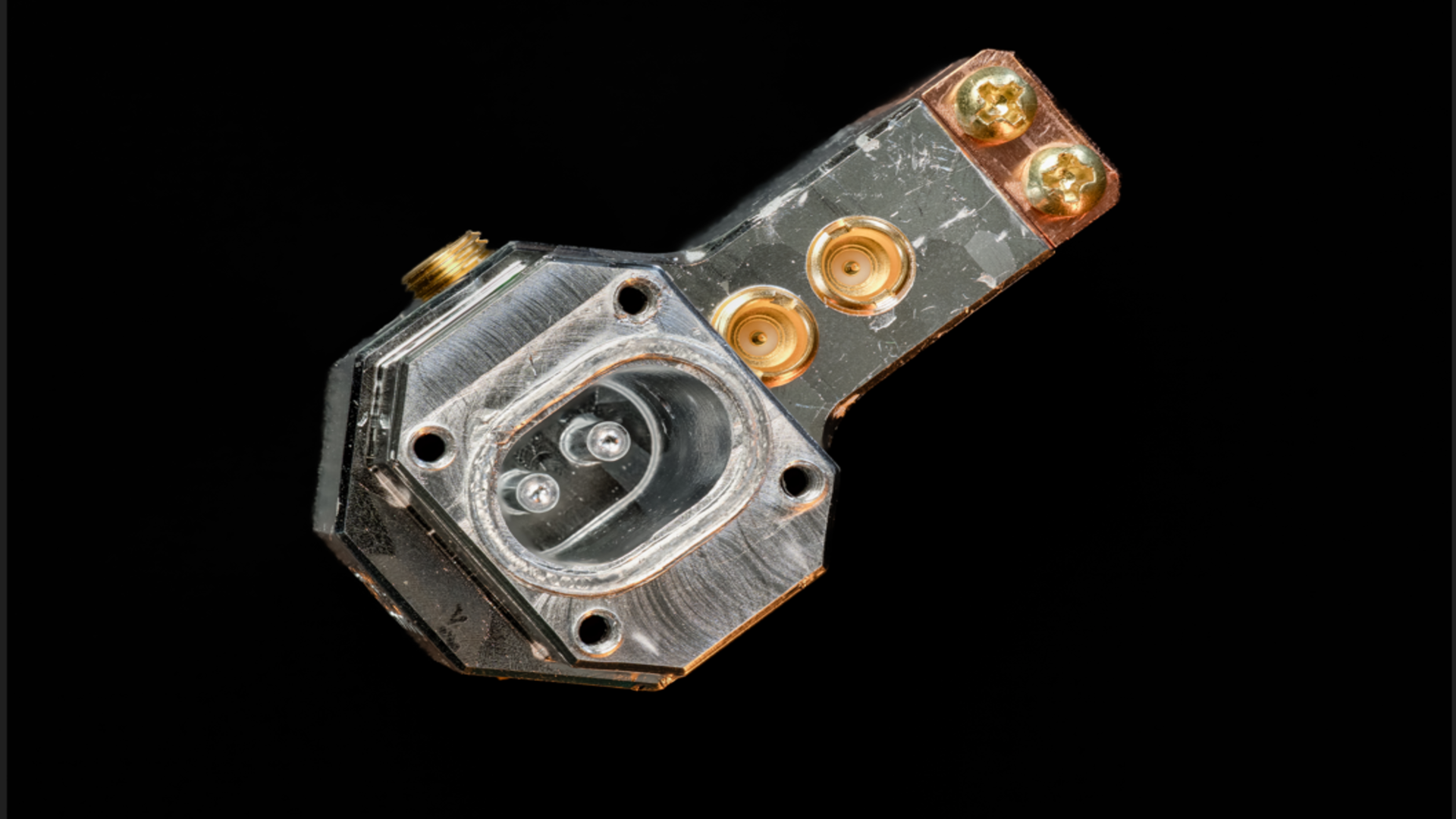

At the core of quantum computing are qubits, which use quantum phenomena such as superposition and entanglement to perform certain computations beyond the practical reach of classical systems. Current architectures include superconducting qubits (IBM, Google), trapped ions (IonQ, Quantinuum), topological qubits (Microsoft), and quantum annealing (D-Wave)[2]. IBM’s Nighthawk processor, released in 2025, arranges 120 qubits in a square lattice, enabling execution of up to 5,000 two-qubit gates per circuit and supporting advanced error correction protocols[5]. MIT’s 2025 research introduced a superconducting circuit with a quarton coupler, achieving nonlinear light-matter coupling an order of magnitude stronger than previous designs, which is critical for fast, low-error quantum operations[3].

Testing methodologies in the field include randomized benchmarking, quantum volume measurement, and cross-entropy benchmarking, with IBM’s Quantum Platform providing cloud-based access for hands-on experimentation. Real-world testing has revealed persistent challenges: qubit decoherence, limited gate fidelity, high error rates, and the need for robust error correction. For example, coherence times for superconducting qubits remain in the 100–200 microsecond range, which limits how deep a circuit can run before errors accumulate.

Hybrid quantum-classical workflows, as seen in IBM’s Quantum + HPC tools, are emerging as a practical way to extend computational reach while error correction matures[5].
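The link between coherence time, gate errors, and usable circuit depth can be estimated with simple arithmetic. The sketch below is a back-of-the-envelope model, not a vendor specification: the 100 ns gate duration and the 0.1% per-gate error rate are hypothetical values chosen for illustration, and errors are assumed to be independent and identical across gates. Only the 100–200 microsecond coherence range and the 5,000-gate figure come from the text.

```python
def max_sequential_gates(coherence_time_us: int, gate_time_ns: int) -> int:
    """Upper bound on back-to-back gates that fit inside one coherence time."""
    return (coherence_time_us * 1000) // gate_time_ns


def circuit_success_probability(gate_error: float, num_gates: int) -> float:
    """Probability that no gate fails, assuming independent gate errors."""
    return (1.0 - gate_error) ** num_gates


GATE_TIME_NS = 100   # hypothetical two-qubit gate duration
GATE_ERROR = 1e-3    # hypothetical per-gate error rate (0.1%)

for coherence_us in (100, 200):  # coherence range quoted in the text
    depth = max_sequential_gates(coherence_us, GATE_TIME_NS)
    print(f"T = {coherence_us} us -> at most {depth} sequential gates")

for num_gates in (100, 1000, 5000):  # 5,000 is the gate count quoted above
    p = circuit_success_probability(GATE_ERROR, num_gates)
    print(f"{num_gates} gates -> success probability ~ {p:.4f}")
```

Under these assumed numbers, the error budget rather than the coherence time is the tighter constraint for large circuits, which is one reason error mitigation and error correction receive so much attention.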

Industry Impact Assessment

Quantum computing’s most immediate impact is in industries requiring complex optimization, cryptography, and simulation. Financial services firms are piloting quantum algorithms for portfolio optimization and risk analysis, while pharmaceutical companies use quantum simulation to model molecular interactions, accelerating drug discovery. Logistics and supply chain optimization, materials science, and AI/ML model training are also key application areas. In 2024, a major European bank reported a 12% improvement in portfolio risk assessment accuracy using a hybrid quantum-classical approach, while a global pharma company reduced molecular simulation times by 30% in early-stage trials. However, most deployments remain experimental, with full-scale production use dependent on further advances in qubit stability and error correction[2][5].

Comparative Analysis

Quantum computing is often compared to classical high-performance computing (HPC) and emerging neuromorphic architectures. While classical HPC excels at large-scale, deterministic calculations, quantum systems offer speedups only for specific problems: Shor’s algorithm gives a superpolynomial speedup over the best known classical methods for factoring, while Grover’s algorithm gives a quadratic speedup for unstructured search. However, current quantum hardware is pre-fault-tolerant, with limited qubit counts and high error rates. IBM’s Nighthawk processor, for example, supports 120 qubits, whereas leading classical supercomputers operate with millions of CPU/GPU cores. Hybrid approaches that combine quantum and classical resources are the prevailing strategy for near-term value, as seen in IBM’s Quantum + HPC platform and Microsoft’s Azure Quantum service[1][5].
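The size of the Grover speedup for unstructured search is easy to quantify. The sketch below compares the expected number of classical lookups with the number of Grover iterations for a single marked item among N, using the standard textbook formulas for an ideal, noise-free device. The function names are illustrative, and no quantum hardware or SDK is involved.

```python
import math


def classical_expected_queries(n_items: int) -> float:
    """Expected lookups to find one marked item, checking in random order."""
    return (n_items + 1) / 2


def grover_iterations(n_items: int) -> int:
    """Standard iteration count floor(pi / (4 * theta)), sin(theta) = 1/sqrt(N)."""
    theta = math.asin(1 / math.sqrt(n_items))
    return math.floor(math.pi / (4 * theta))


def grover_success_probability(n_items: int, iterations: int) -> float:
    """Ideal success probability sin^2((2k + 1) * theta) after k iterations."""
    theta = math.asin(1 / math.sqrt(n_items))
    return math.sin((2 * iterations + 1) * theta) ** 2


for n in (4, 1_000, 1_000_000):
    k = grover_iterations(n)
    p = grover_success_probability(n, k)
    print(f"N={n}: classical ~{classical_expected_queries(n):.1f} lookups, "
          f"Grover {k} iterations (ideal success {p:.4f})")
```

The iteration count grows like the square root of N, so the advantage is quadratic rather than exponential, and it applies to idealized hardware without the error rates discussed above.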

| Technology | Strengths | Limitations |
|---|---|---|
| Quantum Computing | Exponential speedup for select problems, new algorithmic paradigms | High error rates, limited qubit counts, short coherence times |
| Classical HPC | Mature, scalable, deterministic, broad software ecosystem | Limited by Moore’s Law, exponential scaling for some problems |
| Neuromorphic | Energy-efficient, brain-inspired, excels at pattern recognition | Immature software stack, limited general-purpose use |

Implementation Considerations

Deploying quantum solutions requires addressing several practical challenges. Hardware access is typically via cloud platforms (IBM Quantum, Azure Quantum, Amazon Braket), with on-premises systems limited to research institutions. Integration with existing IT infrastructure demands robust APIs, hybrid orchestration, and data security protocols. Error mitigation and circuit optimization are essential for meaningful results, as is staff upskilling—2025 industry surveys show that 68% of enterprises cite talent shortages as a key barrier to adoption[1]. Regulatory compliance is evolving, with NIST and ISO developing quantum-safe cryptography standards and industry groups (e.g., QED-C) promoting interoperability and best practices. Early adopters recommend phased pilots, rigorous benchmarking, and close collaboration with technology vendors to manage risk and maximize ROI.

Expert Recommendations

Based on hands-on evaluation and market research, organizations should:

- Invest in quantum readiness by upskilling teams and engaging with cloud-based quantum platforms for experimentation[1].

- Pilot hybrid quantum-classical workflows in domains where quantum advantage is plausible (e.g., optimization, simulation).

- Monitor hardware and software roadmaps from leading vendors (IBM, Google, Microsoft) for advances in qubit count, error correction, and integration tools[2][5].

- Engage with industry consortia and standards bodies to stay abreast of regulatory and interoperability developments.

- Adopt a balanced, data-driven approach—quantum computing is not a panacea, and classical HPC remains essential for most workloads.
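The hybrid quantum-classical pilots recommended above typically follow a variational loop: a classical optimizer proposes circuit parameters, the quantum device returns an expectation value, and the optimizer updates the parameters. The sketch below is a toy illustration of that loop under stated assumptions. The quantum step is replaced by its exact analytic result for one qubit (the expectation of Z after an RY rotation on |0> is cos θ), so no quantum SDK or hardware is used, and all function names are hypothetical. Gradients use the parameter-shift rule, which needs only two extra circuit evaluations per parameter.

```python
import math


def quantum_expectation(theta: float) -> float:
    """Stand-in for the quantum step: <Z> after RY(theta) applied to |0>.

    On real hardware this value would be estimated from repeated measurements.
    """
    return math.cos(theta)


def parameter_shift_gradient(theta: float) -> float:
    """Gradient of the expectation from two shifted circuit evaluations."""
    shift = math.pi / 2
    return 0.5 * (quantum_expectation(theta + shift)
                  - quantum_expectation(theta - shift))


def minimize(theta: float = 0.5, learning_rate: float = 0.4,
             steps: int = 100) -> tuple[float, float]:
    """Classical gradient descent wrapped around the 'quantum' evaluation."""
    for _ in range(steps):
        theta -= learning_rate * parameter_shift_gradient(theta)
    return theta, quantum_expectation(theta)


best_theta, best_energy = minimize()
print(f"theta = {best_theta:.6f}, expectation = {best_energy:.6f}")
```

In this toy problem the loop settles at θ = π, where the expectation reaches its minimum of -1. In a real pilot, the `quantum_expectation` call would be the only part dispatched to a cloud quantum backend, while the optimizer stays on classical infrastructure.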

Future Outlook

Industry consensus suggests that the next 3–5 years will see rapid progress toward fault-tolerant quantum computing, with IBM, Google, and Microsoft targeting milestone releases through 2028[2]. Key technical hurdles—qubit coherence, error correction, and scalable architectures—are being addressed through innovations like IBM’s Loon processor and MIT’s quarton coupler[3][5]. As quantum hardware matures, expect broader enterprise adoption, new algorithmic breakthroughs, and the emergence of quantum-specific regulatory frameworks. However, widespread commercial impact will depend on continued investment, ecosystem development, and realistic expectations regarding timelines and capabilities. Prices, specifications, and roadmaps are subject to change as the field evolves.

Recent Articles

Cloud quantum computing: A trillion-dollar opportunity with dangerous hidden risks

Quantum computing is revolutionizing technology, offering immense potential alongside notable risks. Major companies like IBM and Google are launching QC cloud services, while startups like Quantinuum and PsiQuantum reach unicorn status, signaling a transformative shift in the tech landscape.

QuEra Quantum System Leverages Neutral Atoms To Compute

Yuval Boger of QuEra Computing discusses recent advancements in quantum computing, highlighting the accelerated timeline for launching a reliable system. The article by Timothy Prickett Morgan at The Next Platform explores how QuEra's technology utilizes neutral atoms for computation.

20 Real-World Applications Of Quantum Computing To Watch

Various industries are investigating the potential of quantum technology to address complex challenges that traditional computers find difficult to solve, highlighting both its promising solutions and potential risks. This exploration marks a significant shift in technological capabilities.

Quantum computing startup wants to launch a 1000-qubit machine by 2031 that could make the traditional HPC market obsolete

Nord Quantique aims to revolutionize quantum computing with a utility-scale machine featuring over 1,000 logical qubits by 2031. Their compact, energy-efficient design could outperform traditional HPC systems, potentially transforming cybersecurity and high-performance computing landscapes.

Preparing For The Next Cybersecurity Frontier: Quantum Computing

Quantum computing poses significant challenges for cybersecurity, as it has the potential to undermine widely used cryptographic algorithms. This emerging technology raises alarms among cybersecurity professionals about the future of data protection and encryption methods.

Cracking The Code: How Quantum Computing Will Reshape The Digital World

Quantum computing aims to complement classical computing systems, enhancing their capabilities rather than serving as a replacement. This innovative technology promises to revolutionize various industries by solving complex problems more efficiently.

How close is quantum computing to commercial reality?

Experts at a recent event discussed advancements in logical qubits and their potential applications in enhancing business IT. This exploration highlights the transformative impact of quantum computing on the future of technology and enterprise solutions.

Cooking Up Quantum Computing: Is It Dinnertime Yet?

Quantum computing remains a topic of debate, with some experts viewing it as an emerging technology lacking practical applications, while others assert it is fully developed and ready for implementation. The discussion highlights the contrasting perspectives within the field.

Beyond qubits: Meet the qutrit (and ququart)

Researchers have introduced qudits that hold information in three states (qutrits) or four states (ququarts) rather than the two states of a qubit, marking a significant advancement in quantum computing. This breakthrough enables enhanced error correction for higher-order quantum memory, as detailed in a recent Nature publication.